Manufacturing Emotional Intelligence

- Media Society Culture

- Apr 12, 2021

Updated: May 21, 2021

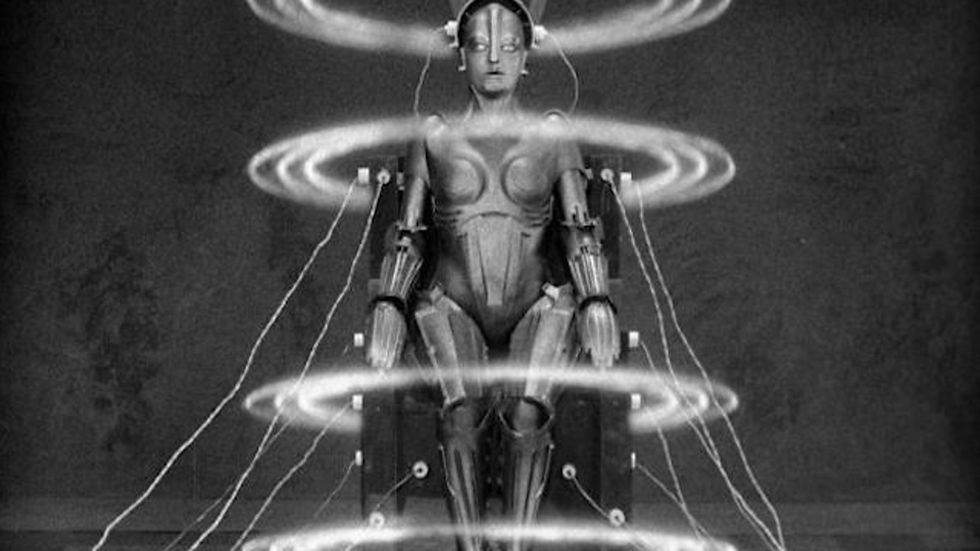

This second post in my short series looking at artificial intelligence and its role in society will consider the ways in which we interact with machines and question their capacity to ever truly understand our emotions and feelings. From the 1960s to the modern day, how much progress has been made, and what might the future hold when it comes to manufacturing an understanding of human emotions?

In 1964 Joseph Weizenbaum at MIT began work on what would become ELIZA - the world’s first chatbot. His work on ELIZA helped establish Weizenbaum as one of the pioneers of modern Artificial Intelligence. ELIZA was a computer programme designed to act like a therapist, responding to the emotional input of a human user in a way intended to encourage the person to open up and talk through their concerns or problems.

ELIZA ran a script called DOCTOR, which found keywords in a user’s input and used them to produce a broadly open-ended response. This broad-brush script mirrored the humanistic, patient-centred (Rogerian) approach to talking therapy, in which the patient does most of the talking. An online version of ELIZA is still available today.

I decided to give it a go:

Well that escalated quickly!

ELIZA was not, in the strictest sense, Artificial Intelligence. Its programming used a selection of schemas and keyword associations to produce pre-scripted responses, which were themselves designed to be fairly open-ended and to put the onus of the conversation back on the ‘patient’. However, a number of people who interacted with ELIZA in 1966 reportedly felt a connection to the machine (Radiotopia, 2019). The experiments with ELIZA were so influential that they gave their name to the ELIZA Effect: the phenomenon in which people become emotionally invested in their interactions with a machine, believing its output to be far more meaningful than it is. This is somewhat different from the Turing test, which a machine is said to pass if a human interacting with it cannot detect that they are speaking with a machine.

Weizenbaum on Artificial Emotional Intelligence

In the years after the creation of ELIZA, Weizenbaum wrote about the limitations of the program, responding to those who thought ELIZA represented the future of mental health therapies. Doctors Colby, Watt and Gilbert (1966) felt that ELIZA promised a future where ‘several hundred patients an hour could be handled by a computer system’. Weizenbaum held that ELIZA was merely ‘an actress’ who had command of a set of rules which permitted a level of improvisation. He likened people’s desire to see more emotional intelligence in ELIZA to the ‘conviction many people have that fortune tellers really possess deep insight’ (Weizenbaum, 1976:189). Furthermore, Weizenbaum critiqued the very idea that a machine could replace a mental health professional, or that a mental health professional would endorse such a replacement, as Colby et al. did:

"What can the psychiatrist's image of his patient be when he sees himself, as a therapist, not as an engaged human being acting as a healer, but as an information processor just following rules?" (Weizenbaum, 1976:6).

Woebot - A 21st Century ELIZA?

Technology has advanced considerably since Weizenbaum’s book was published in 1976, and while AI has not replaced face-to-face mental health care, it does sit alongside it in some cases.

One such example is Woebot, a chatbot that helps users monitor their mood through brief daily conversations. Woebot’s programming is built around techniques drawn from cognitive behavioural therapy (CBT). Using Natural Language Processing (NLP), Woebot tries to adapt to the emotional state of the user. The programme follows a rules-based protocol, but it has a degree of flexibility to meet the needs of users: it can adapt its responses to target a range of needs, including sleep optimisation, goal setting, grief and relationship troubleshooting. While Woebot is more advanced and nuanced than ELIZA, it is notably still running a set of rules to respond to user input in a scripted way. It is no closer to truly understanding human emotion itself.

Perhaps instead, the no doubt vast amounts of data that Woebot now holds from user input could help mental health professionals understand patterns in people’s emotional responses. Professor Jacob Friis Sherson at Aarhus University sees something like this on the horizon when it comes to AI being used to understand human emotion.

“[There may be] an optimisation advantage to putting feelings into algorithms and if that’s the case, then we are seeing a future where humans and computers may look more like each other.” (Friis Sherson, 2020, Almost Human)

The Future of Artificial Emotional Intelligence

Professor Johanna Seibt works in the department of transdisciplinary process studies for integrative social robotics at Aarhus University. She argues that we have a very human desire to project ourselves into the future through AI, with ‘a mixture of delight and horror’ (Seibt, 2020, Almost Human). We see this excitement and fear in fictional representations, media coverage and academic critique.

A lot has changed since ELIZA’s creation in 1964. In addition to mental health chatbots like Woebot, socially assistive robots are proving helpful in a range of settings, from helping children with autism develop their social skills to supporting elderly people with companionship and memory. Whether or not AI entities can truly understand our emotions, they are perhaps helping us understand ourselves a little better.

Sources

Colby, K. M., Watt, J.L., & Gilbert, J. A. (1966) A Computer Method of Psychotherapy: Preliminary Communication. The Journal of Nervous and Mental Disease. Vol 142 (2), pp. 148-152

Friis Sherson, J. in Rønde, J. (Dir.) (2020) Almost Human [film]

Radiotopia (2019) The ELIZA Effect [podcast]. 99% Invisible. Available at: <https://99percentinvisible.org/episode/the-eliza-effect/> [Accessed 11 April 2021].

Seibt, J. in Rønde, J. (Dir.) (2020) Almost Human [film]

Weizenbaum, J. (1976) Computer Power and Human Reason: From Judgement to Calculation. New York: W. H. Freeman & Co.
